Overview

The goal is to implement DevOps best practices for running Terraform in Jenkins Pipelines. We will go over the main concepts that need to be considered and a Jenkinsfile that runs Terraform. The Jenkinsfile will consist of parameters that allow us to pass data as variables into our pipeline job.

- Install Terraform on Jenkins Server

- Install Terraform Plugin on Jenkins

- Configure Terraform

- Store and Encrypt Credentials in Jenkins

- Setting up CD Pipeline with Terraform to Deploy App Servers

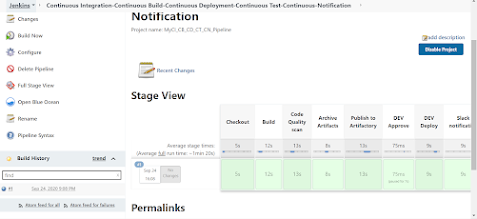

- Run Pipeline Job

Install Terraform on Jenkins Server

Use the following commands to download Terraform on the Jenkins server, unzip it, and move the binary to a directory on the PATH.

- wget https://releases.hashicorp.com/terraform/0.12.24/terraform_0.12.24_linux_amd64.zip

- unzip terraform_0.12.24_linux_amd64.zip

- sudo mv terraform /usr/bin/
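The three commands above can be wrapped in a small script so that the version is stated only once. This is a minimal sketch, not the author's script: the function names `terraform_release_url` and `install_terraform` and the `/tmp` staging path are assumptions made for illustration, and the URL layout is copied from the wget command above.

```shell
#!/bin/sh
# Build the download URL for a Terraform release.
# The layout mirrors the URL used in the wget step above.
terraform_release_url() {
    version="$1"                   # e.g. 0.12.24
    platform="${2:-linux_amd64}"   # defaults to the platform used above
    printf 'https://releases.hashicorp.com/terraform/%s/terraform_%s_%s.zip\n' \
        "$version" "$version" "$platform"
}

# Hypothetical helper: download, unpack, and install one release,
# then print the installed version as a sanity check.
install_terraform() {
    url="$(terraform_release_url "$1")"
    wget -q "$url" -O /tmp/terraform.zip &&
        unzip -o /tmp/terraform.zip -d /tmp &&
        sudo mv /tmp/terraform /usr/bin/ &&
        terraform version
}

# Usage (not run automatically): install_terraform 0.12.24
```

Calling `terraform_release_url 0.12.24` prints the same URL as the wget step, which makes it easy to bump the version later.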

Install Terraform plugin on Jenkins

Go to Manage Jenkins > Manage Plugins > Available and search for Terraform as shown below:

In this case the Terraform Plugin is already installed on my Jenkins, so it is displayed in the Installed section instead.

Store and Encrypt Credentials in Jenkins (Access and Secret Key)

In this step, we will be storing and encrypting the access and secret key in Jenkins to maximize security and minimize the chances of exposing our credentials.

- Go to Manage Jenkins > Manage Credentials and click the highlighted Jenkins link as shown below

- Select Add Credentials

- Choose Secret text in the Kind field

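- Enter the following values for the secret key:

- Secret = EnterYourSecretKeyHere

- ID = AWS_SECRET_ACCESS_KEY

- Description = AWS_SECRET_ACCESS_KEY

- Click OK, then select Add Credentials again and enter the following values for the access key:

- Secret = EnterYourAccessIDHere

- ID = AWS_ACCESS_KEY_ID

- Description = AWS_ACCESS_KEY_ID

Click OK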
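Terraform's AWS provider can read credentials from the `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables, which is why the credential IDs above use those names. Assuming the pipeline binds each stored secret to an environment variable of the same name (the Jenkinsfile is not shown here, so this is an assumption), a guard like the following could fail fast when a binding is missing. The function name `check_aws_env` is hypothetical.

```shell
#!/bin/sh
# Return 0 only when both AWS variables are set and non-empty.
# Only the variable names are reported, never their values.
check_aws_env() {
    missing=0
    for name in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; do
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage: check_aws_env || exit 1
```

Running the check before any Terraform command keeps a misconfigured credential from surfacing later as a less obvious provider error.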

Configure Terraform

Go to Manage Jenkins > Global Tool Configuration. Terraform will be displayed in the list of tools.

- Enter terraform in the Name field

- Provide the path /usr/bin/ as shown below

- Go to Jenkins > New Item. Enter terraform-pipeline in the name field, choose Pipeline, and click OK
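The path given in the tool configuration has to match the directory the binary was moved to during installation. As a quick sanity check on the Jenkins server, something like the sketch below could confirm that an executable named terraform exists there. The function name `has_terraform` is hypothetical, and `/usr/bin` is used as the default only because it is the path from the steps above.

```shell
#!/bin/sh
# Succeed when the given directory contains an executable named terraform.
has_terraform() {
    dir="${1:-/usr/bin}"
    [ -x "$dir/terraform" ]
}

# Usage: has_terraform /usr/bin || echo "terraform not found in /usr/bin" >&2
```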

Bitbucket Changes

- Create a new Bitbucket Repo and call it terraform-pipeline

- Go to Repository Settings after creation and select Webhooks

- Click Add Webhooks

- Enter tf_token as the Title

- Copy and paste the URL as shown below

- Status should be Active

- Check Skip certificate verification

- Under Triggers, choose Repository push

- Go back to Jenkins and select Pipeline script from SCM

- Enter the credentials for Bitbucket, leave the Branch field blank, and make sure the Script Path is Jenkinsfile

- Right click on Pipeline Syntax and open in a new tab.

- Choose Checkout from Version Control in the Sample Step field

- Enter Bitbucket Repository URL and Credentials, leave the branches blank

- Click GENERATE PIPELINE SCRIPT, then copy the credentialsId and url values (these are required for the Jenkinsfile script)

Open File Explorer, navigate to Desktop, and create a folder named cd_pipeline

Once the folder has been created, open Visual Studio Code and add the folder to the workspace

- Open the Terminal

- Open the terraform-pipeline repo in Bitbucket and copy its clone URL

- Running git init before cloning is optional, since git clone creates and initializes the local repository on its own

- Clone the repo with SSH or HTTPS

Create a new file main.tf and copy the code below

    provider "aws" {
      region  = var.region
      version = "~> 2.0"
    }

    resource "aws_instance" "ec2" {
      user_data = base64encode(file("deploy.sh"))
      ami       = "ami-0782e9ee97725263d" # Change the AMI to meet OS requirements as needed.

      root_block_device {
        volume_type           = "gp2"
        volume_size           = 200
        delete_on_termination = true
        encrypted             = true
      }

      tags = {
        Name        = "u2-${var.environment}-${var.application}"
        CreatedBy   = var.launched_by
        Application = var.application
        OS          = var.os
        Environment = var.environment
      }

      instance_type          = var.instance_type
      key_name               = "Enter_KEYPAIR_Name_Here"
      vpc_security_group_ids = [aws_security_group.ec2_SecurityGroups.id]
    }

    output "ec2_ip" {
      value = [aws_instance.ec2.*.private_ip]
    }

    output "ec2_ip_public" {
      value = [aws_instance.ec2.*.public_ip]
    }

    output "ec2_name" {
      value = [aws_instance.ec2.*.tags.Name]
    }

    output "ec2_instance_id" {
      value = aws_instance.ec2.*.id
    }
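The Name tag in main.tf is built by interpolating the environment and application variables into a fixed `u2-` prefix. To make the resulting value concrete, the same pattern can be reproduced in a few lines of shell. The function name `instance_name` and the sample values `dev` and `Artifactory` are illustrative assumptions; only the naming pattern itself comes from the configuration above.

```shell
#!/bin/sh
# Reproduce the Name tag pattern "u2-<environment>-<application>" from main.tf.
instance_name() {
    environment="$1"
    application="$2"
    printf 'u2-%s-%s\n' "$environment" "$application"
}

# Example with hypothetical inputs:
#   instance_name dev Artifactory   prints u2-dev-Artifactory
```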

- Create a new file security.tf and copy the code below

    # The resource name matches the reference in main.tf:
    # aws_security_group.ec2_SecurityGroups.id
    resource "aws_security_group" "ec2_SecurityGroups" {
      name        = "u2-${var.environment}-sg-${var.application}"
      description = "EC2 SG"

      ingress {
        from_port   = 22
        to_port     = 22
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      ingress {
        from_port   = 8081
        to_port     = 8081
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      ingress {
        from_port   = 8082
        to_port     = 8082
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      ingress {
        from_port   = 80
        to_port     = 80
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      # Allow all outbound
      egress {
        from_port   = 0
        to_port     = 0
        protocol    = "-1"
        cidr_blocks = ["0.0.0.0/0"]
      }
    }

- Create a new file variable.tf and copy the code below.

    variable "region" {
      type    = string
      default = "us-east-2"
    }

    variable "instance_type" {}

    variable "application" {}

    variable "environment" {}

    ############## tags

    variable "os" {
      type    = string
      default = "Ubuntu"
    }

    variable "launched_by" {
      type    = string
      default = "USER"
    }

    ############## end tags
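The three variables without defaults (instance_type, application, environment) must be supplied at run time. One way to do that is through environment variables, since Terraform reads any variable named `TF_VAR_<name>` as the value for `<name>`. The sketch below assumes that approach; the helper name `set_tf_vars` and the sample values are hypothetical, and the pipeline's actual Jenkinsfile may pass the values differently.

```shell
#!/bin/sh
# Export the three required variables so Terraform picks them up
# through its TF_VAR_<name> environment variable convention.
set_tf_vars() {
    export TF_VAR_instance_type="$1"
    export TF_VAR_application="$2"
    export TF_VAR_environment="$3"
}

# Usage with hypothetical values:
#   set_tf_vars t2.small Artifactory dev
#   terraform plan
```

Variables that already have defaults in variable.tf (region, os, launched_by) can be left unset, or overridden the same way.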

- Create a new file deploy.sh and copy the code below.

- Create a new file Jenkinsfile and copy the code below.

- Commit and push the code changes to the repo as follows:

- In VS Code, open the Source Control view from the icons along the side of the window

- Click the + icon to stage the changes

- Enter a commit message and commit

- Push the changes by clicking the sync indicator (🔄 0 ⬇️ 1 ⬆️) as shown below

- Go to terraform-pipeline on Jenkins and run build

- Enter Artifactory in the AppName field

- Select the Branch/Lifecycle to deploy the server to

- Choose t2.small or t2.medium for Artifactory server.

- Go to Console Output to track progress
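When following the Console Output, it helps to know roughly which Terraform commands a build of this kind runs. The sketch below prints one plausible sequence for the parameters entered above without executing anything. It is an assumption about the pipeline, not a copy of its Jenkinsfile: the function name `print_terraform_steps`, the plan file name `tfplan`, and the sample environment `dev` are all hypothetical.

```shell
#!/bin/sh
# Print a typical non-interactive Terraform sequence for one deployment.
# Nothing is executed; the commands are only echoed for review.
print_terraform_steps() {
    app="$1"        # value of the AppName parameter
    env_name="$2"   # selected lifecycle/environment
    itype="$3"      # instance type, e.g. t2.small
    echo "terraform init -input=false"
    echo "terraform plan -input=false -out=tfplan -var application=$app -var environment=$env_name -var instance_type=$itype"
    echo "terraform apply -input=false tfplan"
}

# Usage with hypothetical values: print_terraform_steps Artifactory dev t2.small
```

Saving the plan to a file and applying that file means the apply step performs exactly what the plan step reported.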